Proof-of-Security: How Hybrid Analytics Make Cyber Defense Auditable

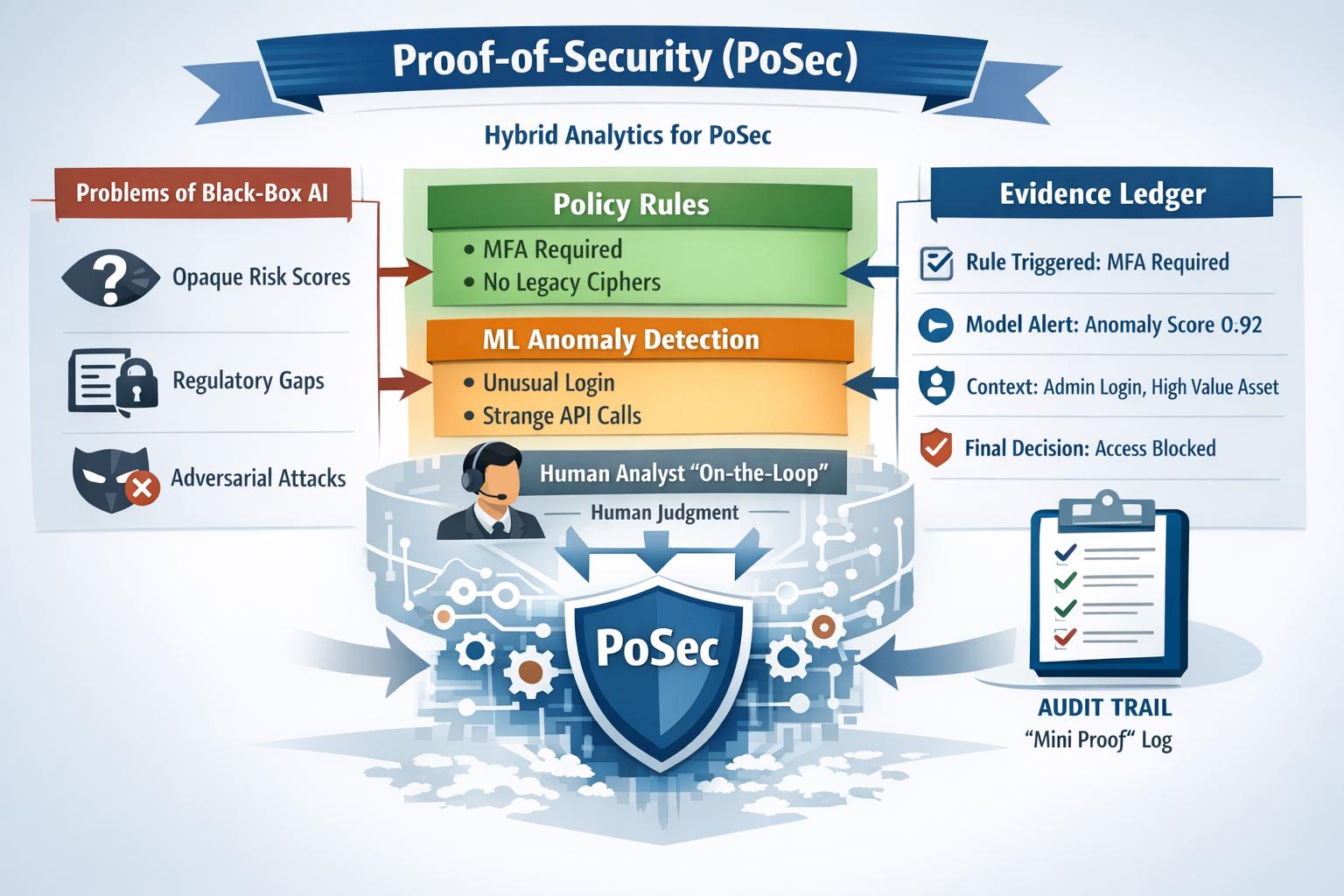

Proof-of-Security (PoSec) reframes “AI for cyber defense” from chasing detection accuracy to producing decisions that can be explained and audited. It argues that black-box models fail in practice because they obscure risk posture, create compliance gaps (e.g., traceability expectations in NIST guidance), and remain fragile to adversarial manipulation, especially as attackers weaponize AI. PoSec instead uses hybrid analytics (deterministic policy rules + ML anomaly detectors + humans on-the-loop) where the combination logic is explicit and rules can dominate high-severity cases. An evidence ledger then logs which rules and models fired, the key context, and the final action, turning each alert into a reconstructable “mini proof” suitable for operations and audits.

AI has become the new marketing glitter in cybersecurity. Vendors promise “self-defending networks” and “autonomous SOCs,” usually powered by opaque models that generate risk scores nobody can really explain. For a safety-critical function like security, that is a problem, not a feature. “Proof-of-Security” (PoSec) is a different way to think about AI in defense: instead of asking “can the model detect threats?”, the focus shifts to “can the system demonstrate and audit why it behaves the way it does?” Moving from black-box AI to PoSec means designing hybrid analytics and explicit evidence, not just clever models.

The limits of black-box AI for cyber defense

Modern Machine Learning (ML) excels at pattern recognition across huge telemetry streams: network flows, Endpoint Detection and Response (EDR) logs, identities, cloud configurations. Surveys on AI in cybersecurity repeatedly show strong gains in detection, especially for anomalies and zero-days [1].

But black-box use comes with three hard failures:

- Unclear risk posture: If analysts can’t see which features drove an alert, they can’t judge whether it is a real incident or just noisy behaviour. Explainable AI (XAI) surveys identify this as the primary barrier to operational adoption [2].

- Regulatory blind spots: The AI Risk Management Framework (RMF) from the National Institute of Standards and Technology (NIST) stresses traceability, transparency, and documentation across the AI lifecycle, especially for high-impact use cases like security [3]. A Security Operations Center (SOC) that “just trusts the model” has no story when auditors ask why a user was blocked or an anomaly ignored.

- Adversarial fragility: NIST’s adversarial machine-learning taxonomy catalogues how models can be poisoned, probed, and evaded [4]. When all logic resides within a single model, attackers only need to exploit that model’s blind spots.

Meanwhile, attackers are already weaponizing AI itself, for example by using agents to automate phishing, vulnerability discovery, and even partial end-to-end cyber operations [5]. Treating defensive AI as inscrutable magic in such an environment is reckless.

Hybrid analytics: rules, models, and humans together

A hybrid approach starts from a simple observation: security isn’t just a pattern-recognition problem, it’s a policy and assurance problem.

A PoSec-oriented architecture typically has three layers:

- Deterministic rules: These encode hard constraints that can be tied to standards and policy: “no deprecated cipher,” “Multi-Factor Authentication (MFA) is required for admin logins,” “no model training on unvetted data sources.” They are traceable and relatively easy to audit.

- ML anomaly detectors: These watch for behavioural deviations the rules don’t capture, for example unusual login timing, odd API sequences, or strange model-usage patterns. They may use classic or deep models, and their scores are treated as inputs to a broader decision, not as the final oracle.

- Human analysts on-the-loop: Recent commentary on human-AI collaboration in cyber emphasizes that AI should triage and summarise, while people make the judgment calls and adapt the system [6].

Crucially, the combination logic is explicit. High-severity rules dominate; ML fills gaps and highlights gray zones. That aligns with the direction of NIST’s guidance and many industry risk frameworks.
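To make "explicit combination logic" concrete, here is a minimal Python sketch in which any high-severity rule hit decides the outcome on its own, and the ML anomaly score only matters in the remaining gray zone. The names (`RuleHit`, `Decision`, `combine`), the severity labels, the action strings, and the 0.7 review threshold are all illustrative assumptions, not part of any standard or product.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class RuleHit:
    rule_id: str      # hypothetical rule identifier
    severity: str     # assumed labels: "low", "medium", "high"
    rationale: str


@dataclass(frozen=True)
class Decision:
    risk: str
    action: str
    reason: str


def combine(rule_hits: List[RuleHit], anomaly_score: float,
            review_threshold: float = 0.7) -> Decision:
    """Combine rule hits and an ML anomaly score with rules taking precedence."""
    # 1. High-severity rules dominate, regardless of what the model says.
    high = [h for h in rule_hits if h.severity == "high"]
    if high:
        ids = ", ".join(h.rule_id for h in high)
        return Decision("high", "block", f"high-severity rule(s): {ids}")

    # 2. Gray zone: lower-severity hits or a high anomaly score go to a human.
    if rule_hits or anomaly_score >= review_threshold:
        reason = (f"{len(rule_hits)} lower-severity rule hit(s), "
                  f"anomaly score {anomaly_score:.2f}")
        return Decision("medium", "escalate_to_analyst", reason)

    # 3. Nothing fired and the score is below the threshold.
    return Decision("low", "allow", "no rule hits; anomaly score below threshold")


mfa_hit = RuleHit("R-MFA-01", "high", "admin login without MFA")
blocked = combine([mfa_hit], anomaly_score=0.05)
escalated = combine([], anomaly_score=0.92)
allowed = combine([], anomaly_score=0.10)
```

Because the precedence is written as ordinary branching rather than learned weights, a reviewer can read off why a low anomaly score did not override the MFA rule, and why a high score alone led to escalation rather than an automatic block.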

The evidence ledger: from alerts to proofs

Hybrid analytics become auditable only when they are logged properly. That is where a security evidence ledger plays a critical role.

Instead of just “Alert: score 0.92,” each decision becomes a record containing:

- which rules fired (IDs, severity, rationale)

- which models contributed (name, version, score, key features)

- relevant context (protocol, asset criticality, user role)

- a final risk rating and action taken

This turns each incident into a self-contained “mini proof”: anyone can reconstruct how the system reasoned. XAI work in cybersecurity points to precisely this kind of contextual, case-based explanation as the most actionable for defenders [2].
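One possible shape for such a record is sketched below in Python. The field names follow the list above, but the class names, the JSON serialization, and every sample value (rule ID, model name, version, features) are hypothetical and chosen only for illustration.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, List


@dataclass
class RuleEvidence:
    rule_id: str
    severity: str
    rationale: str


@dataclass
class ModelEvidence:
    name: str
    version: str
    score: float
    key_features: List[str]


@dataclass
class EvidenceRecord:
    rules_fired: List[RuleEvidence]
    models: List[ModelEvidence]
    context: Dict[str, str]
    risk_rating: str
    action: str

    def to_json(self) -> str:
        # asdict() recurses into the nested dataclasses; sort_keys gives a
        # stable field order so records are easy to diff and review.
        return json.dumps(asdict(self), sort_keys=True)


# Hypothetical example values, not taken from a real system.
record = EvidenceRecord(
    rules_fired=[RuleEvidence("R-MFA-01", "high", "admin login without MFA")],
    models=[ModelEvidence("login-anomaly", "1.4.2", 0.92,
                          ["login_hour", "geo_distance"])],
    context={"protocol": "ssh", "asset_criticality": "high",
             "user_role": "admin"},
    risk_rating="high",
    action="block",
)
line = record.to_json()
```

Writing one such line per decision to append-only storage is one simple way to let an auditor replay a decision later; how the ledger is stored and protected is left open here.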

The same idea applies on the AI security side. When models misbehave or are misused, as documented in incident reports and safety case studies, it becomes important to identify which model version, which defences, and which inputs were involved [7]. A ledger that connects AI events to security telemetry provides the only realistic way to achieve this at scale.
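As a rough illustration of that lookup, the Python sketch below filters ledger entries for every decision a given model version contributed to. It assumes each entry is a plain dictionary with a `models` list holding `name` and `version` keys; that layout, the helper name `records_for_model`, and the sample entries are assumptions made for this example.

```python
from typing import Any, Dict, List

LedgerEntry = Dict[str, Any]


def records_for_model(ledger: List[LedgerEntry], name: str,
                      version: str) -> List[LedgerEntry]:
    """Return ledger entries in which the given model version contributed."""
    return [
        entry for entry in ledger
        if any(m.get("name") == name and m.get("version") == version
               for m in entry.get("models", []))
    ]


# Hypothetical ledger contents for illustration only.
ledger = [
    {"id": "e1", "action": "block",
     "models": [{"name": "login-anomaly", "version": "1.4.2", "score": 0.92}]},
    {"id": "e2", "action": "allow",
     "models": [{"name": "login-anomaly", "version": "1.5.0", "score": 0.12}]},
    {"id": "e3", "action": "escalate_to_analyst", "models": []},
]

affected = records_for_model(ledger, "login-anomaly", "1.4.2")
affected_ids = [entry["id"] for entry in affected]
```

If a specific model version is later found to be faulty, a query like this narrows the review to the decisions it actually influenced.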

Why PoSec matters now

Two trends collide here:

- AI is lowering the barrier for large-scale, high-quality cyberattacks [5].

- Regulators and standards bodies are moving toward evidence-based requirements for AI and security systems, not just promises of accuracy [3].

Proof-of-Security is what enables systems to withstand that collision. Hybrid analytics provide robustness, and the evidence ledger provides accountability. Together, they transform AI from a black box into something that can be defended, explained, and repaired when failures occur.

Edited By: Windhya Rankothge, PhD, Canadian Institute for Cybersecurity

References

[1] “A Comprehensive Survey on Explainable AI in Cybersecurity Domain” https://setsindia.in/wp-content/uploads/2024/06/XAI_Cybersecurity.pdf

[2] G. Rjoub et al., "A Survey on Explainable Artificial Intelligence for Cybersecurity," in IEEE Transactions on Network and Service Management, vol. 20, no. 4, pp. 5115-5140, Dec. 2023, doi: 10.1109/TNSM.2023.3282740.

[3] NIST, “AI Risk Management Framework” https://www.nist.gov/itl/ai-risk-management-framework

[4] NIST, “Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations” https://doi.org/10.6028/NIST.AI.100-2e2025

[5] Anthropic, “Detecting and countering misuse of AI: August 2025” https://www.anthropic.com/news/detecting-countering-misuse-aug-2025

[6] Forcepoint, “Reimagining Cyber Defence: The Rise Of Artificial Intelligence In Security” https://www.forcepoint.com/en-in/blog/insights/reimagining-cyber-defence-rise-artificial-intelligence-security

[7] Mia Hoffmann, “The Mechanisms of AI Harm: Lessons Learned from AI Incidents” https://cset.georgetown.edu/wp-content/uploads/CSET-The-Mechanisms-of-AI-Harm.pdf